Latest posts

Cut Out the Middle Tier: Generating JSON Directly from Postgres

PostgreSQL has built-in JSON generators that can be used to create structured JSON output right in the database, upping performance and radically simplifying web tiers.

4 min read

Getting Started with PGO, Postgres Operator 5.0

An important design goal for PGO 5.0 was to make it as easy as possible to run production-ready Postgres with the features that one expects. Let's see what it takes to run cloud native Postgres that is ready for production.

4 min read

The Next Generation of Kubernetes Native Postgres

We're excited to announce the release of PGO 5.0, the open source Postgres Operator from Crunchy Data. While I'm very excited for you to try out PGO 5.0 and provide feedback, I also want to provide some background on this release.

6 min read

Logging Tips for Postgres, Featuring Your Slow Queries

Today we're going to take a look at a useful setting for your Postgres logs to help identify performance issues. We'll take a walk through integrating a third-party logging service such as LogDNA with Crunchy Bridge PostgreSQL and setting up logging so you're ready to start monitoring and watching for performance issues.

8 min read

Fun with pg_checksums

Data checksums are a great feature in PostgreSQL. They are used to detect any corruption of the data that Postgres stores on disk. Every system we develop at Crunchy Data has this feature enabled by default. It's not only Postgres itself that can make use of these checksums.

10 min read

PostgreSQL on Linux: Counting Committed Memory

By default Linux uses a controversial (for databases) memory extension feature called Overcommit. How that interacts with PostgreSQL is covered in the Managing Kernel Resources section of the PG manual.

6 min read

Better Range Types in Postgres 14: Turning 100 Lines of SQL Into 3

Almost a decade after range types were introduced, Postgres 14 makes it easier to write "boring SQL" for range data. Meet the "multirange" data type.

5 min read

Devious SQL: Run the Same Query Against Tables With Differing Columns

We spend time day in, day out, answering the questions that matter and coming up with solutions that make the most sense. However, sometimes a question comes up that is just so darn … interesting that even if there are sensible solutions or workarounds, it still seems like a challenge just to take the request literally. Thus was born this blog series, Devious SQL. (Devious - longer and less direct than the most straightforward way.)

6 min read

Better JSON in Postgres with PostgreSQL 14

A feature highlight of the new JSON subscript support within PostgreSQL 14.

5 min read

Simulating UPDATE or DELETE with LIMIT in Postgres: CTEs to The Rescue!

There have certainly been times, when using PostgreSQL, that I’ve yearned for an UPDATE or DELETE statement with a LIMIT feature. While the SQL standard itself has no say in the matter as of SQL:2016, there are definite cases of existing SQL database dialects that support this.

10 min read

PGO 4.7, the Postgres Operator: PVC Resizing, GCS Backups, and More

We're excited to announce version 4.7 of PGO, the open source Postgres Operator from Crunchy Data! There are a lot of really cool features that make it easy to deploy production Postgres clusters on Kubernetes.

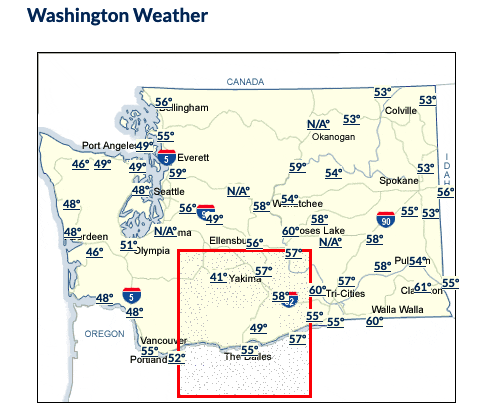

5 min read

Waiting for PostGIS 3.2: ST_InterpolateRaster

A common situation in the spatial data world is having discrete measurements of a continuous variable. Every place in the world has a temperature, but there are only a finite number of thermometers - how should we reason about places without thermometers and how should we model temperature?

4 min read

TLS for Postgres on Kubernetes: OpenSSL CVE-2021-3450 Edition

Not too long ago I wrote a blog post about how to deploy TLS for Postgres on Kubernetes in an attempt to provide a helpful guide for bringing your own TLS/PKI setup to Postgres clusters on Kubernetes. In part, I also wanted a personal reference for how to do it!

7 min read

Creating a Read-Only Postgres User

Learn how to create a read-only user in Postgres, both now and with a look ahead to Postgres 14.

7 min read

(The Many) Spatial Indexes of PostGIS

The simple story of spatial indexes is - if you are planning to do spatial queries (which, if you are storing spatial objects, you probably are) you should create a spatial index for your table.

6 min read

Using Kubernetes? Chances Are You Need a Database

Whether you are starting a new development project, launching an application modernization effort, or engaging in digital transformation, chances are you are evaluating Kubernetes. If you selected Kubernetes, chances are you will ultimately need a database.

4 min read

Choice of Table Column Types and Order When Migrating to PostgreSQL

An underappreciated element of PostgreSQL performance can be the data types chosen and their organization in tables. For sites that are always looking for that incremental performance improvement, managing the exact layout and utilization of every byte of a row (also known as a tuple) can be worthwhile.

9 min read

Announcing Google Cloud Support for Crunchy Bridge

Crunchy Data, the leading provider of trusted open source PostgreSQL, is proud to announce the addition of Google Cloud Platform (GCP) to its list of providers supported on Crunchy Bridge.

4 min read

Your Guide to Connection Management in Postgres

Connection pooling and management is one of those things most people ignore far too long when it comes to their database. As you grow into the hundreds of connections, better connection management is a quick and easy win. Let's dig into the three variations of connection pooling, how to identify whether you can benefit from a connection pooler, and where.

5 min read

Postgres is Out of Disk and How to Recover: The Dos and Don'ts

The ultimate goal for any unplanned database interruption is to reduce data loss. So the advice here is aimed at someone in a production situation prioritizing minimizing data loss.

12 min read
